Millennials, assemble! As children, did you ever imagine computer screens responding to your touch, your voice, and your face? Our screens used to be pretty basic: a boring display (boring now; back then it was the thing!) and a cursor. All we knew was the sound of typing and clicking as we interacted with the electronic box. Today the change is so radical that our younger selves would not believe it. We’ve certainly come a long way, and that’s the power of technology and innovation.

First, touch screens and now touchless!

At the beginning of personal computing, human–machine communication was a dance of arrows, icons, and clicks. We typed commands. We clicked buttons. We accepted awkward feedback loops as the price of progress. With the onset of COVID-19, gadgets that needed little or no touch became popular, and touchless or zero user interfaces grabbed everyone’s attention.

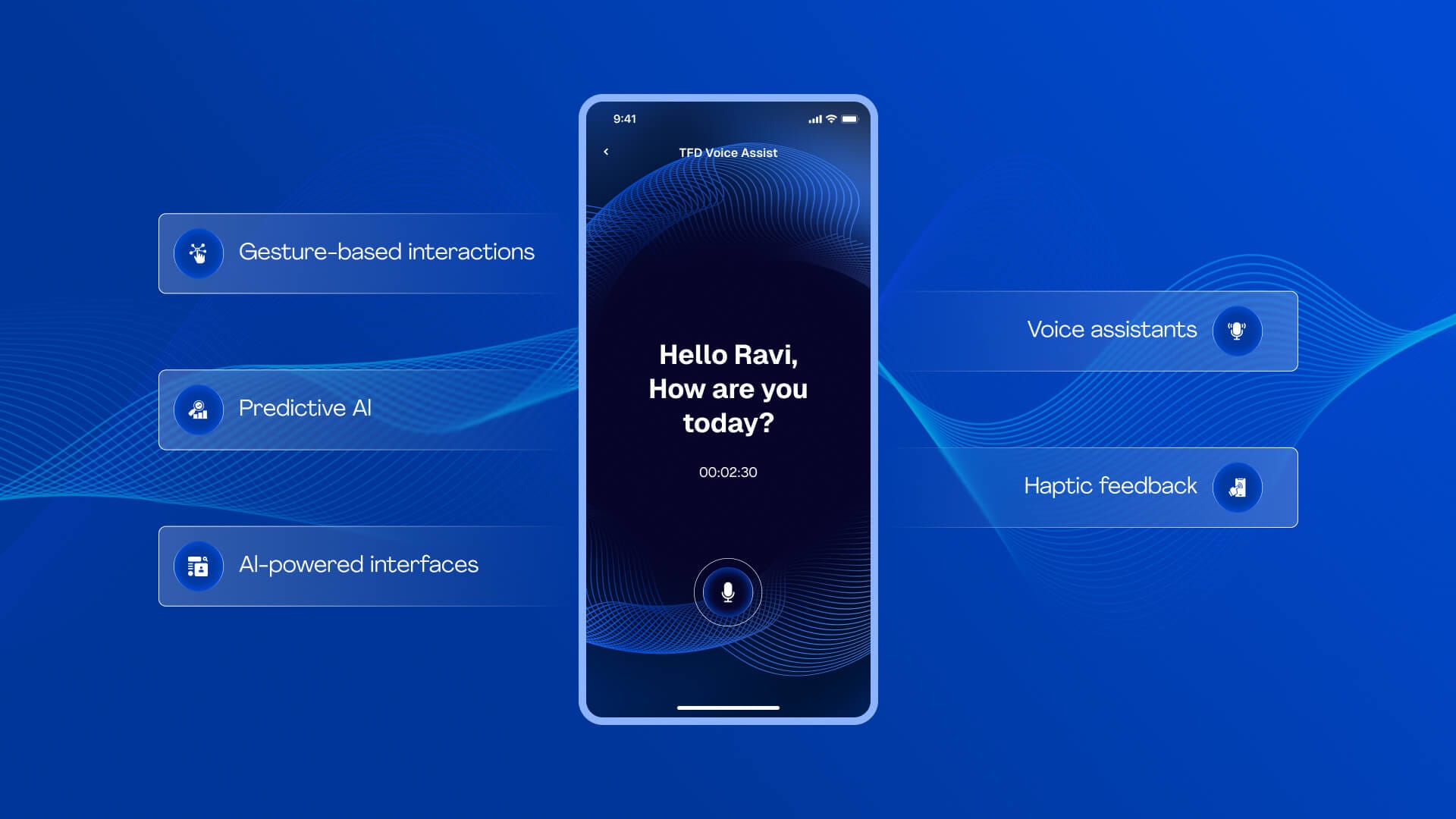

Fast forward to today: our devices are learning not just what we click or tap, but how we move, what we say, when we walk in, and even how our bodies feel. This transition, driven by advances in artificial intelligence, edge computing, and the proliferation of sensors, has reshaped experience architecture. We’re moving from the explicit and visible to the ambient and invisible.

What Is Zero UI?

Zero UI is a revolution in how systems infer user intent. In a Zero UI framework:

- Biometrics, gestures, voice, and environmental context replace clicks and swipes.

- Interactions are implicit: they happen through sound, presence, movement, and even emotion.

- Interfaces blend into the environment. There’s no need to open an app, because the room knows whether you want music or coffee.

Enterprises embracing this trend use phrases like ‘interfaces that fade away’ or ‘design that becomes ambient.’ The idea is not to shrink technology but to weave it seamlessly into our daily lives.

What’s Fuelling the Invisible Interface?

Three factors have converged to push Zero UI into the mainstream:

- Voice recognition has matured thanks to advances in automatic speech recognition and natural language understanding, and smart speakers are driving the boom. In APAC, 86 % of Chinese households owned a smart speaker in late 2023, while penetration in India stood at 20.9 %. Globally, the smart speaker market reached USD 13.7 billion in 2024 and could double by 2032.

- Advances in computer vision and sensor fusion let devices ‘see’ and interpret facial expressions, gestures, and proximity. Apple’s Face ID and Google’s Soli radar sensing show that interpreting physical input is becoming commonplace.

- Edge AI and sensor miniaturization enable real-time processing on the device itself, with no round-trip to the cloud, so devices can respond faster, more privately, and more intuitively.

Together, these developments make systems context-aware, able to anticipate your needs with little effort on your part.
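To make "context-aware" a little more concrete, here is a minimal sketch of rule-based sensor fusion in Python. The sensor fields, the thresholds (50 lux, a 6:00 to 9:00 morning window), and action names such as `start_coffee` are hypothetical; real products typically learn such patterns from data rather than hard-coding them.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Reading:
    presence: bool     # someone is in the room (e.g. from a motion sensor; assumed input)
    lux: float         # ambient light level in lux
    local_time: time   # current local time of day


def infer_actions(r: Reading) -> list[str]:
    """Fuse several sensor readings into ambient actions using hypothetical hand-written rules."""
    if not r.presence:
        return ["lights_off"]  # empty room: save energy
    actions = []
    if r.lux < 50:
        actions.append("lights_on")  # occupied and dark
    if time(6, 0) <= r.local_time < time(9, 0):
        actions.append("start_coffee")  # occupied during the assumed morning window
    return actions


print(infer_actions(Reading(presence=True, lux=10.0, local_time=time(7, 30))))
# ['lights_on', 'start_coffee']
```

No app is opened and no button is pressed: the combination of presence, light level, and time of day stands in for an explicit command.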

Touchless vs. Contactless: Decoding the Difference

In the context of Zero UI, ‘touchless’ and ‘contactless’ have distinct meanings:

- Touchless emphasizes gesture-driven interaction: think of waving a hand to unlock a phone

- Contactless refers to proximity-based systems, such as tapping a transit card

Zero UI influences both, but touchless is more about expressiveness and intent, while contactless is about convenience and efficiency.

Zero UI in Our Lives

Amazon Go

Amazon Go, which debuted in Seattle in January 2018, eliminated checkout lanes entirely. Ceiling-mounted cameras and weight sensors track what you pick up and bill your account automatically. Retail analysts estimated that each Go store generates approximately USD 1.5 million in annual revenue, about 50 % more than traditional grocery stores. Even with hardware outlays exceeding USD 1 million per store, plus backend support costs in India, Go’s potential to reach USD 4.5 billion a year (across 3,000 sites) says a lot about its power to disrupt the market.
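The USD 4.5 billion figure is the analysts’ per-store estimate scaled up to 3,000 sites, which assumes every site matches the USD 1.5 million average:

$$
3{,}000 \text{ stores} \times \text{USD } 1.5 \text{ million per store per year} = \text{USD } 4{,}500 \text{ million} = \text{USD } 4.5 \text{ billion per year}
$$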

But the technology has not been entirely invisible. Amazon has scaled back cashierless systems in its Fresh stores, is moving toward hybrid models, and employs human verification to ensure accuracy. Even systems designed to be completely invisible, however ambitious, still need human intervention.

Smart Homes & Wearables

Modern homes now respond to your presence: lights, thermostats, and music can all be triggered by voice or gesture. China’s 86 % smart speaker adoption shows how deeply this technology has been integrated into daily life. Wearables are catching up, too. As one Reddit commenter put it: “71 % of wearable device owners expect they’ll perform more voice searches in the future… Voice is predicted to be a USD 45 billion channel by 2028.” A projected 75 % of households will own smart speakers by 2025, making voice a standard interface rather than a rarity.

Cybersecurity: Invisible Doesn’t Mean Insecure

While Zero UI aims to simplify the user experience, it also opens the door to new cyber risks. Traditional verification methods such as passwords or PINs may not fit gesture- or voice-based systems, which introduces unique vulnerabilities.

For example, voice spoofing, in which attackers use synthetic or pre-recorded voices to fool systems, has already appeared in banking and smart home automation. Likewise, facial recognition systems can be spoofed with high-definition photos or deepfake videos. Sensors, however advanced, are also vulnerable to spoofing or jamming, which can trigger unwanted responses or block legitimate ones.

Zero UI systems also tend to be always on, always listening or watching, which increases the likelihood of data collection without the user’s knowledge. This phenomenon, termed ‘privacy bleed,’ can lead to the storage of sensitive information, behavioral patterns, or even moods.

To build secure Zero UI, developers must adopt:

- Multi-modal authentication (such as combining voice, device ID, and user behavior patterns)

- Strong encryption applied to every sensor data stream

- Privacy-by-design principles that treat data minimization as a foundation, not an add-on

- Periodic sensor recalibration to avoid environmental manipulation

Zero UI security is a matter of securing perception.
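The multi-modal authentication idea can be sketched as a simple "two of three factors" rule in Python. The signal names, the 0.80 and 0.60 score thresholds, and the two-factor requirement are illustrative assumptions, not a standard; the scores are assumed to come from separate speaker-verification and behavioral models. The point of the design is that a spoofed voice on its own is not enough to get in.

```python
from dataclasses import dataclass

# Illustrative thresholds, not an industry standard.
VOICE_MIN = 0.80
BEHAVIOR_MIN = 0.60
REQUIRED_FACTORS = 2


@dataclass
class Signals:
    voice_score: float     # 0..1 match score from a speaker-verification model (assumed)
    device_trusted: bool   # request came from a registered device ID
    behavior_score: float  # 0..1 similarity to the user's usual patterns (assumed)


def passed_factors(s: Signals) -> list[str]:
    """List the independent factors that individually pass their checks."""
    passed = []
    if s.voice_score >= VOICE_MIN:
        passed.append("voice")
    if s.device_trusted:
        passed.append("device")
    if s.behavior_score >= BEHAVIOR_MIN:
        passed.append("behavior")
    return passed


def authenticate(s: Signals) -> bool:
    """Grant access only when at least REQUIRED_FACTORS factors agree."""
    return len(passed_factors(s)) >= REQUIRED_FACTORS


# A convincing (possibly spoofed) voice from an unknown device with unusual behavior is rejected.
print(authenticate(Signals(voice_score=0.95, device_trusted=False, behavior_score=0.2)))  # False
```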

The UX Imperative: Designing without Screens

Zero UI design challenges traditional thinking about user interfaces. When the interface is invisible, feedback becomes critical: users must sense that an action has occurred even if no screen flashes and no button clicks.

That means reimagining feedback as light, sound, haptics, or environmental change. A room that dims its lights when a meeting starts, or a soft tone that plays when a door unlocks: these are subtle but powerful examples of UX design.

Designers must also build for multiple modalities. Not everyone is comfortable with voice, and not every gesture means the same thing across cultures. Inclusive design is central to Zero UI, ensuring that older users, people with disabilities, and those in noisy environments can still control their experience.
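One way to combine screenless feedback with inclusive design is to choose the confirmation channel from the environment and the user’s needs. The Python sketch below is a hypothetical illustration: the 70 dB cutoff, the channel names, and the `companion_app_notification` fallback are assumptions, not an established scheme.

```python
NOISY_DB = 70.0  # hypothetical level above which audio cues are likely to be missed


def pick_feedback(noise_db: float, can_hear: bool = True, can_see: bool = True,
                  has_haptics: bool = False) -> list[str]:
    """Choose screenless feedback channels that suit the environment and the user."""
    channels = []
    if can_hear and noise_db < NOISY_DB:
        channels.append("sound")   # e.g. a soft tone
    if can_see:
        channels.append("light")   # e.g. a brief lighting change
    if has_haptics:
        channels.append("haptic")  # e.g. a vibration on a wearable
    # Graceful fallback: never leave the user without confirmation.
    return channels or ["companion_app_notification"]


print(pick_feedback(noise_db=85.0, can_see=False, has_haptics=True))  # ['haptic']
```

Returning several channels at once is deliberate: redundant cues make it more likely that at least one reaches the user.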

UX designers also need to build frameworks around anticipation, mapping out user journeys that depend not only on explicit actions but also on behavior patterns and environmental influences. And when the system fails, as it inevitably will, there must be graceful fallback options that let users regain control.

From Concept to Product: Zero UI Roadmaps

Designing Zero UI products calls for a strategic roadmap that blends design thinking, data science, and real-world context. It’s not simply about adding voice commands or sensors; it’s about rethinking the entire product experience.

Startups and enterprises should begin by identifying high-friction user moments that ambient or touchless methods could improve. Building entry, payment transactions, and customer service interactions, for instance, can all be redesigned with Zero UI.

Engineering teams need to build fail-safes, such as redundant triggers (e.g., voice plus gesture) or clear opt-in points for users. Extensive testing in diverse real-world environments is crucial: a voice assistant that works in a lab may stumble in a noisy kitchen or on a busy train.
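A redundant trigger with a graceful fallback might look like the following Python sketch. An action runs only when a voice command and a gesture for the same intent arrive within a short window; a trigger that is not corroborated falls back to asking the user explicitly. The `Event` type, the intent name `unlock_door`, and the 2-second window are hypothetical.

```python
from dataclasses import dataclass

WINDOW_S = 2.0  # hypothetical: max seconds between matching voice and gesture triggers


@dataclass
class Event:
    modality: str     # "voice" or "gesture"
    intent: str       # e.g. "unlock_door" (hypothetical intent name)
    timestamp: float  # seconds since some reference point


def resolve(events: list[Event], intent: str) -> str:
    """Return 'execute' if voice and gesture agree within WINDOW_S,
    'confirm' (ask the user explicitly) if triggers exist but do not corroborate
    each other, and 'ignore' if nothing was triggered for this intent."""
    voice = [e.timestamp for e in events if e.modality == "voice" and e.intent == intent]
    gesture = [e.timestamp for e in events if e.modality == "gesture" and e.intent == intent]
    if not voice and not gesture:
        return "ignore"
    if any(abs(v - g) <= WINDOW_S for v in voice for g in gesture):
        return "execute"
    return "confirm"


events = [Event("voice", "unlock_door", 10.0), Event("gesture", "unlock_door", 11.5)]
print(resolve(events, "unlock_door"))  # execute
```

Requiring agreement between two modalities trades a little convenience for protection against a stray gesture or an overheard phrase, while the `confirm` path keeps the user in control when the system is unsure.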

Business and legal stakeholders need to step in early to assess data policies, regulatory compliance, and liability. With Zero UI, the interface may be hidden, but the risk landscape becomes more complex.

Ethical Implications of Zero UI in Design

As we transition to ambient and predictive systems, the ethics become murkier. When systems gather data indirectly, from voice, motion, or emotion, how do we determine consent?

One big issue is informed awareness. If a Zero UI system reacts to unintentional gestures or listens for cues continuously, users may not even know they’re interacting with a system at all. This blurs the line between user interaction and monitoring.

Bias in machine learning models can also result in discrimination. Facial recognition systems have been shown to misidentify people from non-white ethnic groups at higher rates. When these systems are invisible, biased outcomes become all the more insidious.

Ethical design in Zero UI must therefore include:

- Transparent disclosure of what is being monitored, how, and why

- User control over how often data is captured and how long it is retained

- Regular bias audits of data and algorithms

- Ethical review boards within product teams to assess deployment implications

Designing invisibility must not mean avoiding responsibility. On the contrary, invisible technology must meet a higher bar of ethical scrutiny.
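User control over data retention could be enforced with a policy like the Python sketch below. The `SensorRecord` type, the stream names, and the per-stream retention windows are hypothetical; streams the user has not approved are dropped by default, in the spirit of data minimization.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SensorRecord:
    stream: str            # e.g. "microphone" or "camera" (hypothetical stream names)
    captured_at: datetime
    payload: bytes


def purge_expired(records: list[SensorRecord], retention: dict[str, timedelta],
                  now: datetime) -> list[SensorRecord]:
    """Keep records only while their stream's user-chosen retention window lasts.
    Streams without a retention entry are not kept at all (data minimization by default)."""
    kept = []
    for r in records:
        window = retention.get(r.stream)
        if window is not None and now - r.captured_at <= window:
            kept.append(r)
    return kept


now = datetime(2024, 1, 10)
records = [
    SensorRecord("microphone", datetime(2024, 1, 9), b"..."),
    SensorRecord("camera", datetime(2024, 1, 1), b"..."),
    SensorRecord("motion", datetime(2024, 1, 9), b"..."),
]
user_policy = {"microphone": timedelta(days=1), "camera": timedelta(days=7)}
print([r.stream for r in purge_expired(records, user_policy, now)])  # ['microphone']
```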

What Next: The Decade of Invisible Tech

Zero UI is just the beginning. The next decade will take us from invisible interfaces to intelligent spaces, spaces that understand, predict, and respond without instruction.

Neural interfaces, such as Elon Musk’s Neuralink and Meta’s brain-computer interface research, are already underway. These interfaces aim to capture user intent directly from brain activity, potentially eliminating the need for gesture or speech altogether.

Emotion AI is also on the rise. By analyzing vocal tone, facial expressions, and physiological signals, systems could soon detect stress, excitement, or confusion, and adjust interfaces accordingly.

The spread of ubiquitous edge computing means that intelligence will no longer be centralized in servers but embedded in walls, clothing, glasses, and even the air around us. And LiDAR-powered volumetric AR with real-time rendering will let us interact with floating 3D interfaces without wearables.

Thrilling as they are, these technologies will demand thoughtful regulation, deep interdisciplinary partnerships, and a renewed focus on human well-being. In the future of Zero UI, the interface may disappear, but humans must never become invisible.

Concluding Insights

Zero UI is not just another design trend but a rethinking of technology’s place in human life. Where screens and buttons once ruled every interaction, intent, presence, and context will soon suffice. Still, the path is neither simple nor purely technological: trust, security, ethics, and acceptance must be deliberately built into every design.

As invisible UI becomes mainstream, UX designers, technologists, and strategists will need to master not just markup and layout but ambient experience, sensor fusion, and intent architecture. In this world, the most elegant interface doesn’t show itself. It adapts, responds, and disappears, leaving only a wonderful experience.

Ravi Talajiya

CEO of TheFinch

With over a decade of experience in digital design and business strategy, Ravi leads TheFinch with a vision to bridge creativity and purpose. His passion lies in helping brands scale through design thinking, innovation, and a deep understanding of user behavior.